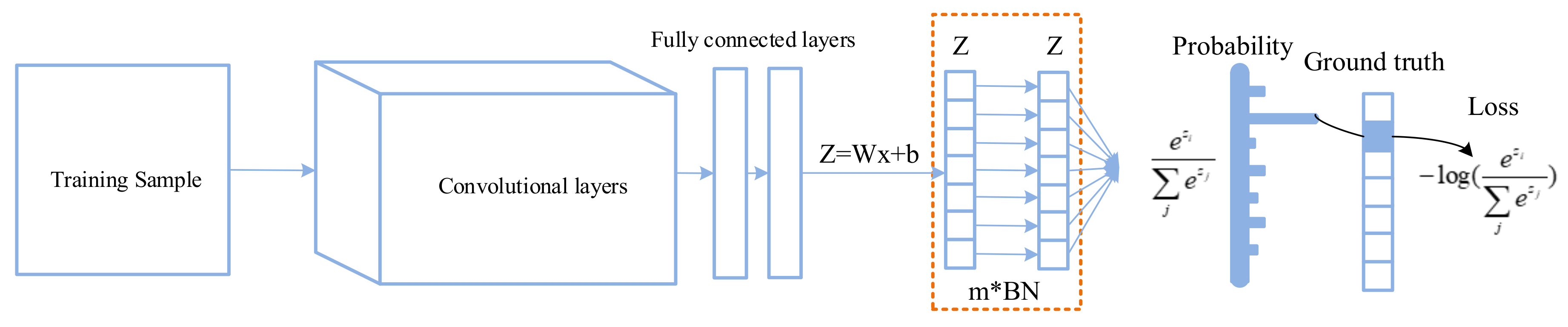

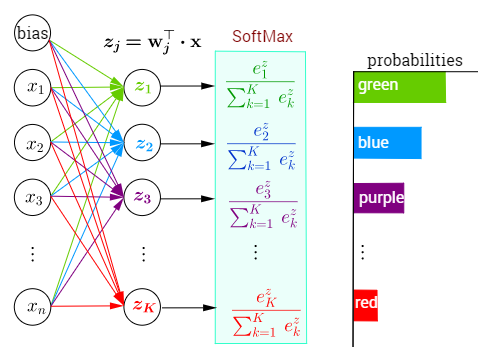

The structure of neural network in which softmax is used as activation... | Download Scientific Diagram

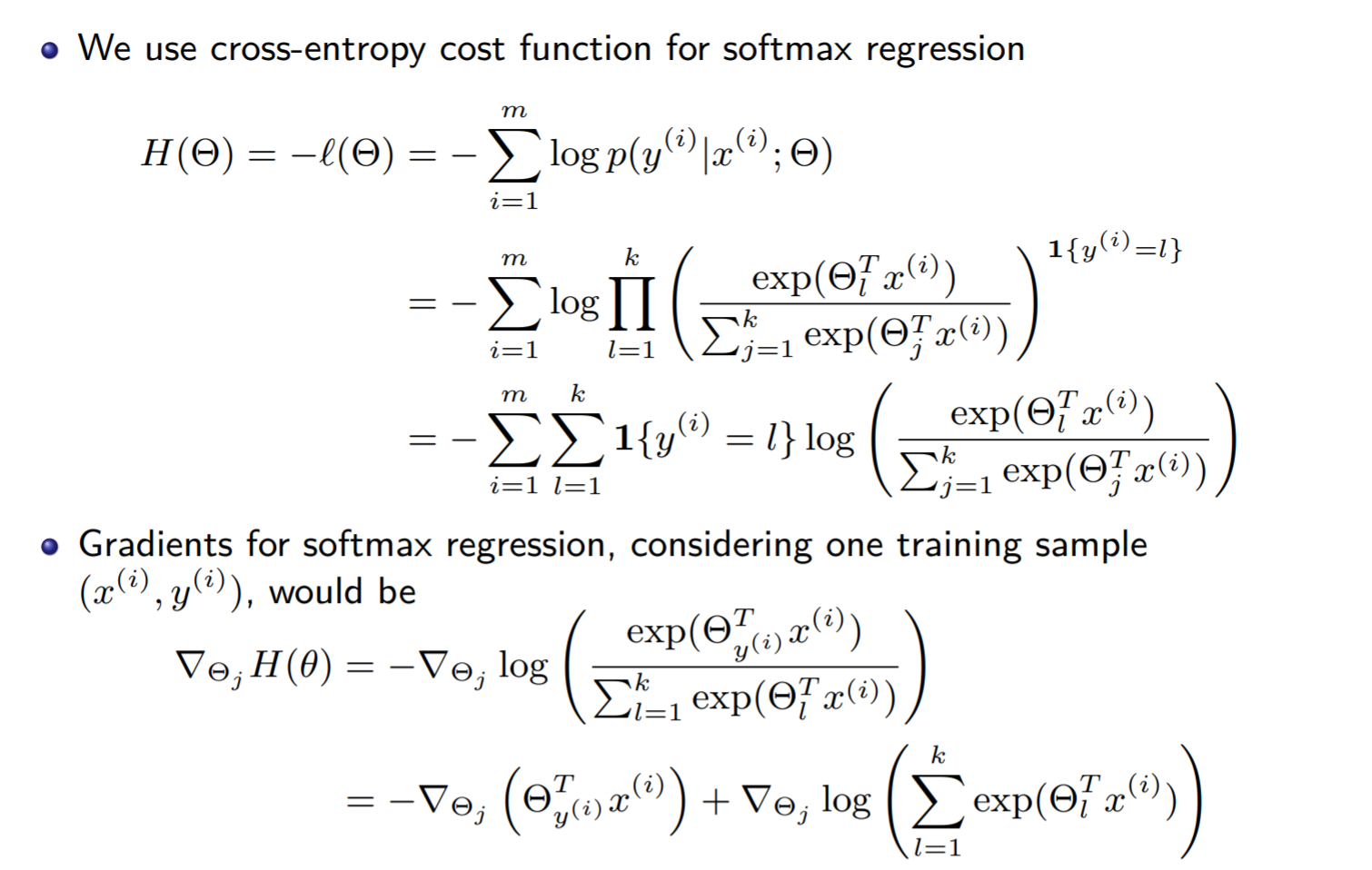

Applied Sciences | Free Full-Text | Improving Classification Performance of Softmax Loss Function Based on Scalable Batch-Normalization

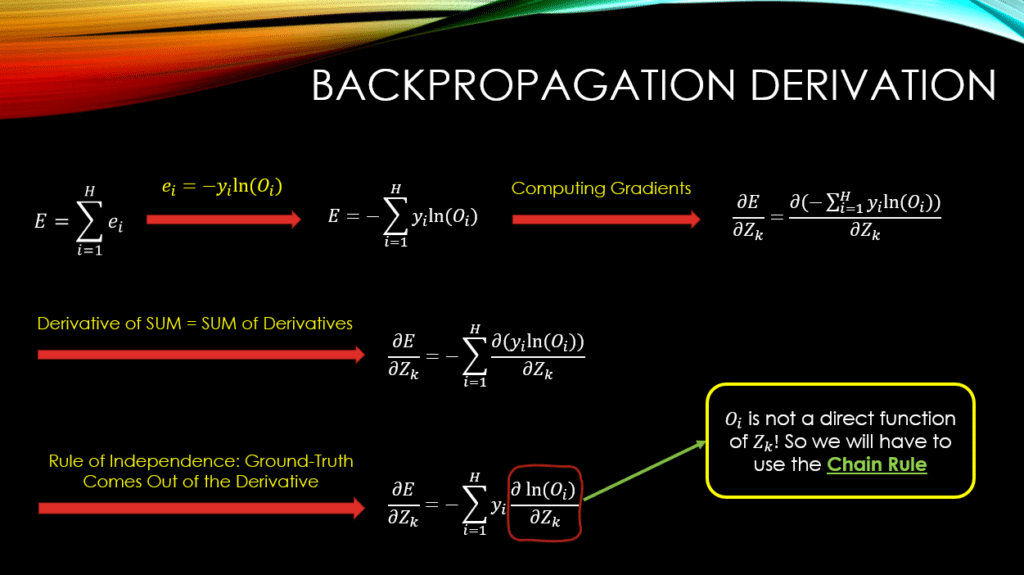

Convolutional Neural Networks (CNN): Softmax & Cross-Entropy - Blogs - SuperDataScience | Machine Learning | AI | Data Science Career | Analytics | Success

![\[PDF\] Rethinking Softmax with Cross-Entropy: Neural Network Classifier as Mutual Information Estimator | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/8af471bfeb34dd5f024e5d1a2c46daed91a0d27a/7-Figure1-1.png)

[PDF] Rethinking Softmax with Cross-Entropy: Neural Network Classifier as Mutual Information Estimator | Semantic Scholar

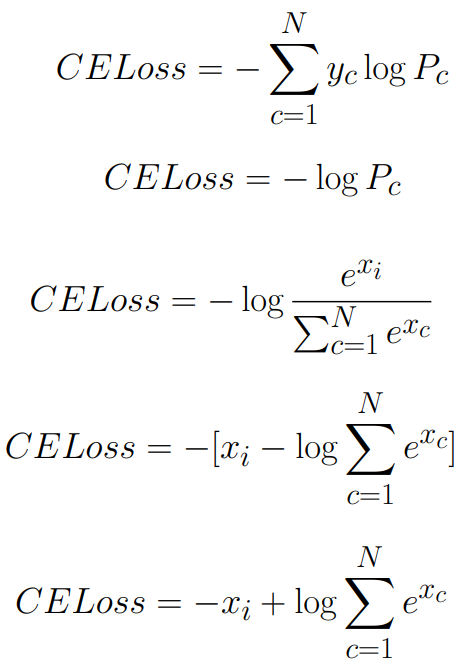

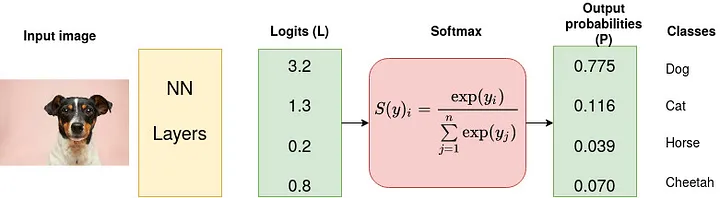

Why Softmax not used when Cross-entropy-loss is used as loss function during Neural Network training in PyTorch? | by Shakti Wadekar | Medium

objective functions - Why does TensorFlow docs discourage using softmax as activation for the last layer? - Artificial Intelligence Stack Exchange

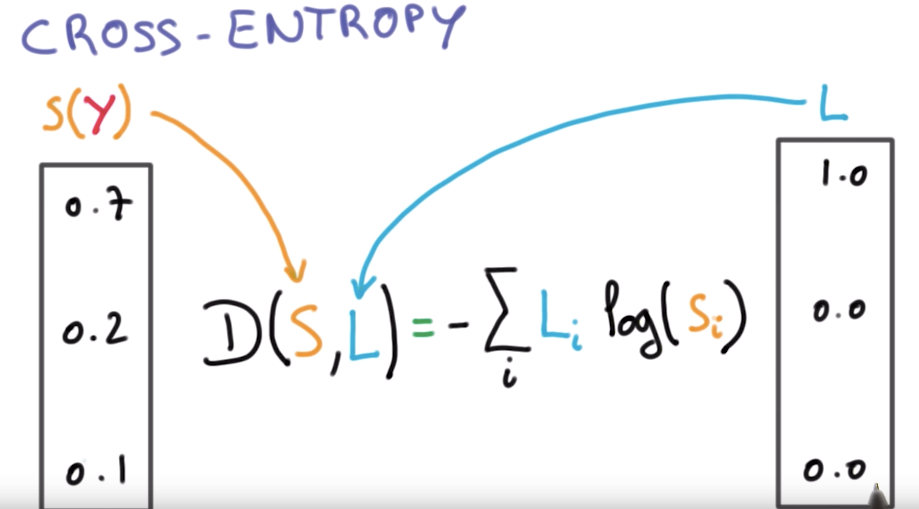

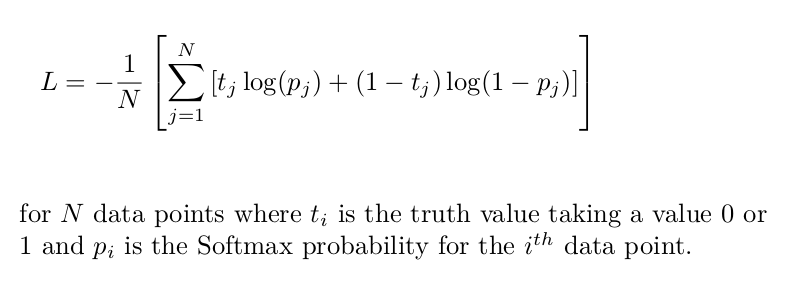

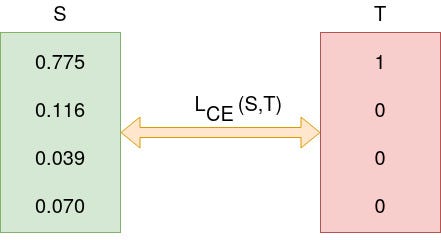

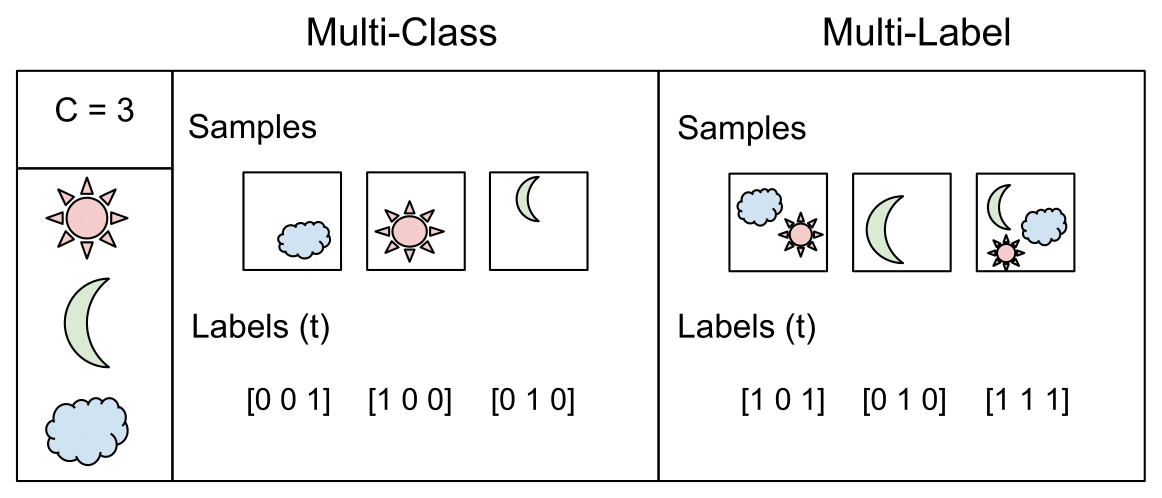

Cross-Entropy Loss Function. A loss function used in most… | by Kiprono Elijah Koech | Towards Data Science

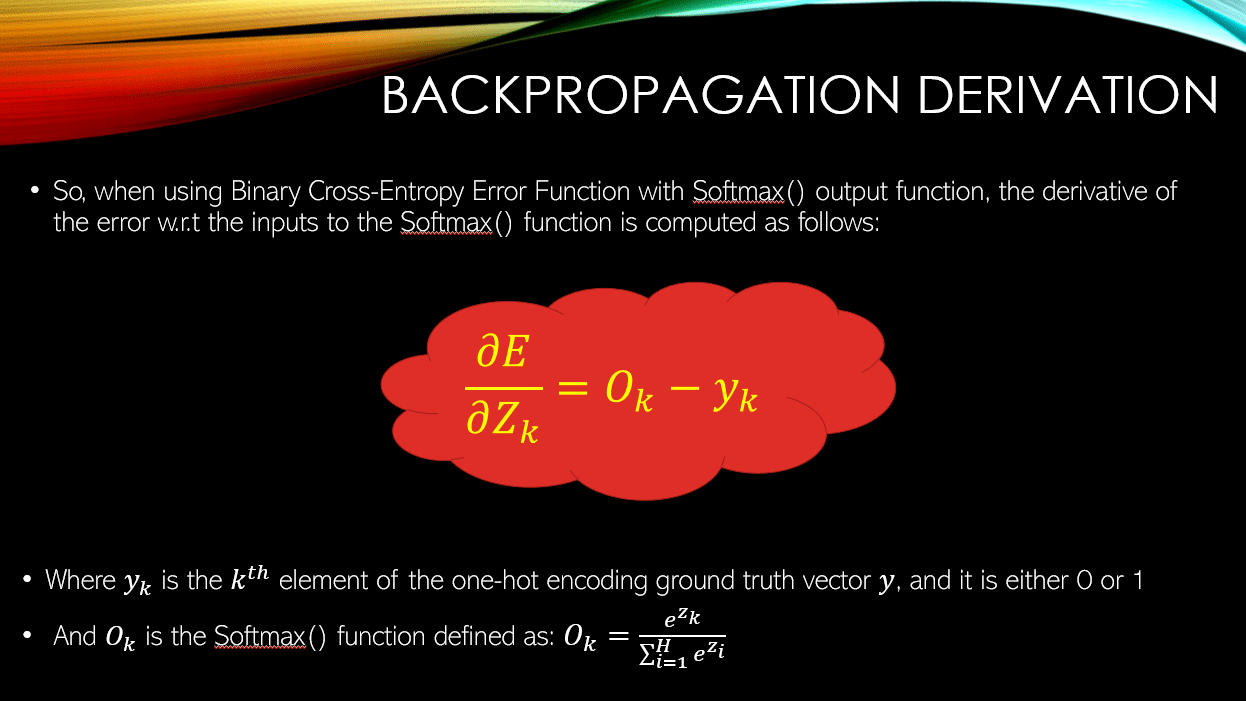

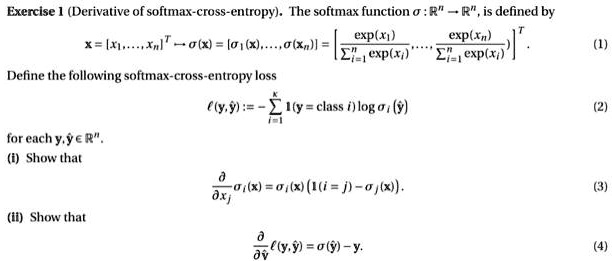

SOLVED: Texts: Exercise 1 (Derivative of softmax-cross-entropy). The softmax function, denoted as σ(x), is defined by σ(x) = exp(xn) / Σ(exp(xi)) for i = 1 to n Let's define the following softmax-cross-entropy

machine learning - What is the meaning of fully-convolutional cross entropy loss in the function below (image attached)? - Cross Validated

![\[DL\] Categorial cross-entropy loss (softmax loss) for multi-class classification - YouTube](https://i.ytimg.com/vi/ILmANxT-12I/hq720.jpg?sqp=-oaymwEhCK4FEIIDSFryq4qpAxMIARUAAAAAGAElAADIQj0AgKJD&rs=AOn4CLDP2Mcvs9IEnETkFGUgaLNZ2t-iGg)